The final required class I took in grad school was called “Statistical Consulting,” and unlike every other course I took I did almost no actual statistics in it. Instead, this course predominantly focused on how to take all of the fancy methods and advanced concepts I had learned over the last two years and communicate the results to people without a background in statistics.

As a policy analyst, I find myself communicating with non-statisticians more often than in school. This is an exciting opportunity to share the statistical tools I have with an audience that can meaningfully apply my results. These are some of the things I learned that have helped me better explain complicated concepts to a less technical audience.

Ask your audience for their statistical background

During my first ever consulting project as a grad student, I spent about 10 minutes of my first meeting going over the pros and cons of using a time-series approach with the client before they had the opportunity to ask me what a time-series model was. This is not to say that the discussion on the pros and cons of the time series approach was wrong or that the client didn’t need to be included in that conversation, but rather that had I known my client’s background I would have approached that discussion differently.

In this particular case, my client wasn’t interested in the statistical differences between the models I was proposing, but rather what the practical differences would be in the final report. After resetting, we were able to have a much more productive discussion that was tailored to his level of understanding.

Use visuals when possible

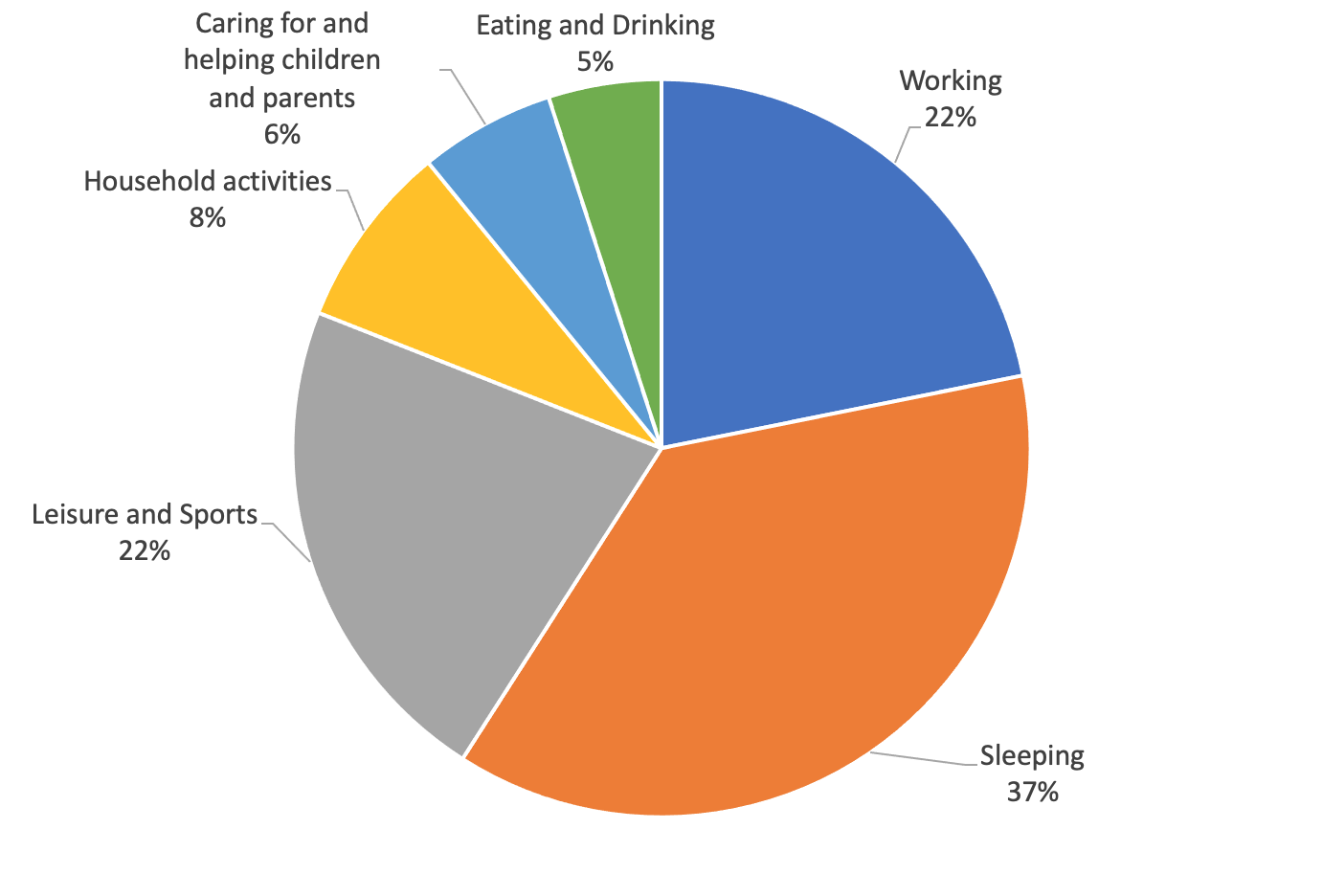

Good data visualizations can communicate a complicated idea almost instantly, especially when paired with a clear written description of the main takeaways. However, it is important to not get carried away with visualizations.

One common mistake is visualizing every possible part of an analysis. Visuals are great for highlighting the most important parts of a report. Highlighting everything might make it harder to find the most important pieces of information.

Another consideration to have is when to use a graph or a table to visualize data. A general rule of thumb is to use tables when the specific value of a result is important and graphs when the broader trend is important.

Provide context

Statistics never exist in a vacuum, and it is important to provide context in order to make your results more useful. Being upfront about what data or methods you used, what the strengths or weaknesses of your approach were, these are the sort of non-result pieces of information that help people understand the full picture of an analysis.

Be honest

There are a whole lot of approaches we can use when analyzing data, and some of these approaches might allow for different interpretations of the results. Statistics sometimes gets viewed as a scientific and objective way to examine the world around us (which it largely is), but the perspective of the analyst has a lot of weight in determining the final message.

Always make sure you are being clear about the assumptions your models make, and the limitations of your results. It goes without saying that intentionally misleading charts or excluding critical information has no place in any respectable analysis.